There are k! substitution ciphers for an alphabet with k letters—too many for an exhaustive search. With a frequency-based approach adapted to the graph of alphabetic ciphers, we redefine the act of deciphering as a sampling problem suitable for a Metropolis-Hastings random walk. A substitution cipher is thus solvable with a Markov chain.

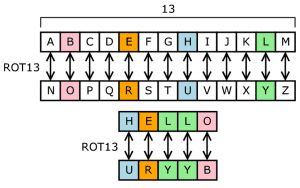

Let’s begin with a review of some basic cryptography: a simple substitution cipher replaces letters one-for-one in the target language. There are thus possible English substitution ciphers.

Brute force

With the set of all ciphers

we shuffle through by reassignment of letters in a guess

,

One way of reassignment is by rotation as with the Caesar cipher.

For a brute force tactic the objective is to overwhelm the solution space. With possible English substitution ciphers this is unfeasible, so we turn to methods such as frequency analysis to make educated guesses.

Assign keys to letters—evaluate, and repeat until a pattern emerges from the yet-to-be decrypted text.

Scoring a Key

We can evaluate a cipherkey’s fitness by comparing the distributions of letter and letter-to-letter frequencies. If we swap two letter assignments, the change in fitness is measured as a difference between corresponding frequency tensors.

To do so efficiently, we swap the corresponding row/column pairs and recompute only the impacted entries.

First order frequency matrix

The first-order frequency tensor is a matrix. For the letter ‘a’ it measures the distribution of letters ‘a’–’z’ that come after it. This is equivalent to counting occurrences of digrams ‘aa,’ ‘ab,’ … , ‘az’ in the cipher text.

The evaluated ciphertext is compared against a baseline: assuming we’re tracking the first-order letter-to-letter frequencies, we obtain the frequency tensor by counting the digrams in a known English text. By comparing the digrams, we form a statistical foundation to solve the substitution cipher by Markov chain. A score is obtained as the cumulative difference between entries in both tensors.

Statistical Objective Function

By comparing scores, obtained from values representing frequencies, we construct the test from Statistics. Define

for the first-order frequencies and English baseline

.

The solution to the cipher is the providing lowest discrepancy

from the baseline distribution of digram frequencies.

Global Optimization

In general, the solution key to a ciphertext provides a global optimum to the score function . If we were to choose a strict optimizer, and choose new cipher-letter assignments on a strict basis of improving the score

over some initial guess

, we would soon be trapped at one of many the many local optima to

. This task is thus ill-posed for hill-climb-like methods.

Instead we must allow our attempted solution to degrade at times—balancing exploration of the solution space with optimization of the score.

Bottlenecks

Solving a cipher one letter-swap at a time, 25/26 letters of the alphabet must be nailed down before we’re able to identify all 26.

In order to get to the appropriate solution, we must pass through certain regions, known as bottlenecks.

A strict optimizer has a hard time passing through the bottleneck. In scoring text, ehlloelhho

hello, so we become trapped at a local optimum. Solving a substitution cipher by Markov chain means we end up cycling between the best nearby solutions.

One way to do this is with a Metropolis-Hastings (MH) algorithm.

Sampling with Metropolis-Hastings

Suppose we want to sample from the stationary i.e. asymptotic distribution of an ergodic Markov chain.

From the Markov balance equation

define the probability we accept a new state

by the proportion

Here, can be any function assigning nonzero values to all states

.

is known as the proposal distribution, and is chosen with a specific problem in mind. For Metropolis-Hastings as a sampling method,

should be chosen to minimize the impact of starting guess

i.e. reduce the chain’s mixing time.

We will apply the Metropolis-Hastings algorithm to sample from the set of cipherkeys with good score. In particular, we’d like to compute the unique sample argmin. Using MH, we select new cipherkeys randomly, but with a configurable bias toward improvements in score.

Proposing a Key

Denoting the cipherkeys we will have guessed in

steps of our algorithm, define the probability

that we accept a new cipherkey. In the case of blindly choosing any one of the

keys with equal probability, we are sampling from a uniform stationary distribution of cipher keys

accepting any new keys with relative probabilities

where for any current key

and proposed

.

Controlling the Optimization Rate

For something beyond brute force, however, we can use an Ising model. The Ising model assigns each key proposal a probability depending on differences in score

and a “temperature” which we set aside as a free parameter of the optimization method. We accept a new key with probability

This produces a Metropolis-Hastings random optimizer that we can control through manipulating the Ising parameter . When

, we are strictly optimizing the score function

, and when

we are uniformly sampling all

cipherkeys.

The temperature can be varied on the go. This is a fascinating analogue to metallurgical annealing, where metals such as bronze, tin, and copper are put through controlled fluctuations in temperature. The process of varying

over optimization is the focus of Simulated Annealing.

![Rendered by QuickLaTeX.com \[\phi(\bold x) = \text{score} := \sum_{i\in I}\frac{(x_i - \mu_i)^2}{\mu_i} \sim \chi^2\]](https://www.alexwei.net/wp-content/ql-cache/quicklatex.com-39789e82d8d47262060ff464405f00c7_l3.png)

![Rendered by QuickLaTeX.com \[\frac{A(x|x_0)}{A(x_0|x)} = \frac{P(x)}{P(x_0)} \frac{g(x_0|x)}{g(x|x_0)}\]](https://www.alexwei.net/wp-content/ql-cache/quicklatex.com-2e7b1c325fba30abca8a04a5ac314347_l3.png)

![Rendered by QuickLaTeX.com \[\frac{A(x|x_0)}{A(x_0|x)} = \frac{P(x)}{P(x_0)} \frac{g(x_0|x)}{g(x|x_0)} = \frac{1}{1} \frac{g(x_0|x)}{g(x|x_0)} = 1\]](https://www.alexwei.net/wp-content/ql-cache/quicklatex.com-7098ca248b001a2b236d168d4eb48052_l3.png)